BackupPC

| | |
|---|---|
| Developer(s) | Craig Barratt |
| Initial release | September 21, 2001 |
| Stable release | 3.3.1 / January 11, 2015 |
| Preview release | 4.0.0alpha3 / December 1, 2013 |
| Repository | sourceforge |
| Written in | Perl |
| Operating system | Cross-platform |
| Type | Backup |
| License | GPL 2 |
| Website | backuppc |

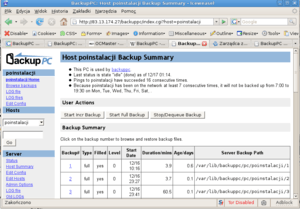

BackupPC is a free disk-to-disk backup software suite with a web-based frontend. The cross-platform server will run on any Linux, Solaris, or UNIX-based server. No client software is necessary, as the server is itself a client for several protocols that are handled by other services native to the client OS. In 2007, BackupPC was mentioned as one of the three best-known open-source backup programs,[1] even though it is one of the tools that are "so amazing, but unfortunately, if no one ever talks about them, many folks never hear of them".[2]

Data deduplication reduces the disk space needed to store the backups in the disk pool. BackupPC can be used as a disk-to-disk-to-tape (D2D2T) solution if its archive function is used to back up the disk pool to tape. BackupPC is not a block-level backup system like Ghost4Linux; it performs file-based backup and restore, and is therefore not suitable for backing up disk images or raw disk partitions.[3]

BackupPC incorporates a Server Message Block (SMB) client that can be used to back up network shares of computers running Windows. Paradoxically, under such a setup the BackupPC server can be located behind a NAT firewall while the Windows machine operates over a public IP address. While this may not be advisable for SMB traffic, it is more useful for web servers running Secure Shell (SSH) with GNU tar and rsync available, as it allows the BackupPC server to be placed in a subnet separate from the web server's DMZ.

It is published under the GNU General Public License.

Protocols supported

BackupPC supports NFS, SSH, SMB and rsync.[4]

It can back up Unix-like systems with native ssh and tar or rsync support, such as Linux, BSD, and OS X, as well as Microsoft Windows shares with minimal configuration.[5]

On Windows, third-party implementations of tar, rsync, and SSH (such as Cygwin) are required to use those protocols.[6]

Protocol choice

The choice between tar and rsync is dictated by the hardware and bandwidth available to the client. Clients backed up by rsync use considerably more CPU time than client machines using tar or SMB. Clients using SMB or tar use considerably more bandwidth than clients using rsync. These trade-offs are inherent in the differences between the protocols. Using tar or SMB transfers each file in its entirety, using little CPU but maximum bandwidth. The rsync method calculates checksums for each file on both the client and server machines in a way that enables a transfer of just the differences between the two files; this uses more CPU resources, but minimizes bandwidth.[7]
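The bandwidth-for-CPU trade-off of the rsync method can be illustrated with a simplified sketch. It is not the actual rsync or BackupPC implementation: the function names (`signature`, `delta`, `patch`), the tiny block size, and the use of Adler-32 and MD5 are assumptions chosen for illustration, and the weak checksum is recomputed for every window instead of being rolled incrementally as real rsync does.

```python
import hashlib
import zlib

BLOCK = 4  # unrealistically small block size, for illustration only


def signature(old: bytes, block: int = BLOCK) -> dict:
    """Checksums of the copy already held: weak checksum -> [(strong hash, block index)]."""
    sig = {}
    for i in range(0, len(old), block):
        chunk = old[i:i + block]
        entry = (hashlib.md5(chunk).digest(), i // block)
        sig.setdefault(zlib.adler32(chunk), []).append(entry)
    return sig


def delta(new: bytes, sig: dict, block: int = BLOCK) -> list:
    """Describe `new` as ("copy", block_index) and ("data", literal_bytes) operations."""
    ops, literal, pos = [], bytearray(), 0
    while pos < len(new):
        window = new[pos:pos + block]
        match = None
        # Cheap weak checksum first, strong hash only on a candidate hit.
        for strong, idx in sig.get(zlib.adler32(window), []):
            if hashlib.md5(window).digest() == strong:
                match = idx
                break
        if match is None:
            literal.append(new[pos])  # no known block starts here: send one byte
            pos += 1
        else:
            if literal:
                ops.append(("data", bytes(literal)))
                literal = bytearray()
            ops.append(("copy", match))  # block already exists on the other side
            pos += len(window)
    if literal:
        ops.append(("data", bytes(literal)))
    return ops


def patch(old: bytes, ops: list, block: int = BLOCK) -> bytes:
    """Rebuild the new file from the old copy plus the delta operations."""
    out = bytearray()
    for kind, arg in ops:
        out += old[arg * block:(arg + 1) * block] if kind == "copy" else arg
    return bytes(out)
```

Only the `"data"` operations carry file contents over the network; the checksum work on both sides is where the extra CPU time goes. A tar or SMB transfer corresponds to sending the whole file as a single literal.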

Data storage

BackupPC uses a combination of hard links and compression to reduce the total disk space used for files. At the first full backup, all files are transferred to the backend, optionally compressed, and then compared. Files that are identical are hard linked, which uses only one additional directory entry. As a result, a system administrator could back up ten Windows XP laptops with 10 GB of data each; if 8 GB is identical on every machine (Office and Windows binary files), 100 GB would appear to be needed, but only 28 GB (10 × 2 GB + 8 GB) would be used.[8] Compression of the data on the back-end further reduces that requirement.
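The hard-link pooling idea and the disk space arithmetic can be sketched as follows. This is a minimal illustration and not BackupPC's actual pool layout: the helper names (`store_in_pool`, `pooled_size_gb`, `demo`), the file paths, and the use of a SHA-256 digest as a stand-in for comparing file contents are all assumptions.

```python
import hashlib
import os
import tempfile


def store_in_pool(data: bytes, pool_dir: str, dest_path: str) -> None:
    """Keep one copy of each distinct content in the pool and hard-link the backup path to it."""
    digest = hashlib.sha256(data).hexdigest()  # assumed stand-in for a content comparison
    pool_path = os.path.join(pool_dir, digest)
    if not os.path.exists(pool_path):
        with open(pool_path, "wb") as f:
            f.write(data)  # first occurrence: the data is stored once
    os.makedirs(os.path.dirname(dest_path), exist_ok=True)
    os.link(pool_path, dest_path)  # later occurrences cost only a directory entry


def pooled_size_gb(machines: int, unique_gb: float, shared_gb: float) -> float:
    """Disk space needed when the shared data is stored only once."""
    return machines * unique_gb + shared_gb


def demo() -> tuple:
    """Back up the same file from two hypothetical laptops and inspect the links."""
    with tempfile.TemporaryDirectory() as root:
        pool = os.path.join(root, "pool")
        os.makedirs(pool)
        a = os.path.join(root, "laptop1", "winword.exe")
        b = os.path.join(root, "laptop2", "winword.exe")
        store_in_pool(b"identical binary", pool, a)
        store_in_pool(b"identical binary", pool, b)
        return os.path.samefile(a, b), os.stat(a).st_nlink
```

For the laptop example, `pooled_size_gb(10, 2, 8)` gives 28, against 100 GB for ten independent copies. The sketch requires a filesystem that supports hard links.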

When browsing the backups, incremental backups are automatically filled back to the previous full backup, so every backup appears to be a full and complete set of data.
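Filling can be pictured as overlaying the changes recorded by an incremental backup on the previous full backup. The sketch below assumes a simplified model in which a backup is a mapping from path to content and deletions are recorded with a marker; the names `DELETED` and `filled_view` are hypothetical and do not describe BackupPC's on-disk format.

```python
DELETED = object()  # hypothetical marker for a file removed since the full backup


def filled_view(full: dict, incrementals: list) -> dict:
    """Return the complete file set as seen after applying each incremental in order."""
    view = dict(full)  # start from the full backup, leaving it unmodified
    for inc in incrementals:
        for path, content in inc.items():
            if content is DELETED:
                view.pop(path, None)  # file no longer exists at this point in time
            else:
                view[path] = content  # new or changed file replaces the older version
    return view


full = {"/etc/hosts": "v1", "/home/a.txt": "v1", "/tmp/old.log": "v1"}
incremental = {"/home/a.txt": "v2", "/tmp/old.log": DELETED, "/home/b.txt": "v1"}
view = filled_view(full, [incremental])
```

In this model the incremental stores only three entries, yet `view` lists every file that existed when the incremental was taken, including the unchanged `/etc/hosts`.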

Performance

- When backing up a remote SMB share, speeds of 3–4 Mbit/s are normal.

- When backing up a remote Linux PC by rsync (over an SSH tunnel) on a 1 gigabit LAN, speeds of over 100 megabytes/sec are possible when doing a full backup.

- Backup speed is greatly dependent on the underlying hardware (both server and client), backup protocol, and the characteristics of the data being backed up.

- Because full backups transfer everything, they report higher throughput; incremental backups show a much lower MB/s figure because comparatively more time is spent determining which files have changed than actually transferring data.
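The last point can be made concrete with a small calculation. The model and all numbers below are hypothetical and not measurements of BackupPC: the reported rate is assumed to be the data transferred divided by the total run time, where the run time includes a fixed period spent checking which files have changed.

```python
def effective_rate_mb_s(transferred_mb: float, scan_seconds: float, wire_rate_mb_s: float) -> float:
    """Reported throughput: data moved divided by scan time plus transfer time."""
    transfer_seconds = transferred_mb / wire_rate_mb_s
    return transferred_mb / (scan_seconds + transfer_seconds)


# Hypothetical host: 100 s to examine the file tree, 100 MB/s on the wire.
full_rate = effective_rate_mb_s(transferred_mb=100_000, scan_seconds=100, wire_rate_mb_s=100)
incremental_rate = effective_rate_mb_s(transferred_mb=500, scan_seconds=100, wire_rate_mb_s=100)
```

Under these assumptions the full backup reports close to the wire rate, while the incremental reports only a few MB/s even though it finishes far sooner.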

Forks and related projects

- BackupAFS is a version of BackupPC patched to back up AFS or OpenAFS volumes to a backup server's local disk or attached RAID. It supports all BackupPC features, including full and multi-level incremental dumps, exponential expiry, compression, and configuration via conf files or a web interface. Backup speed depends on the underlying hardware; however, when performing full backups of multi-gigabyte AFS volumes using modern hardware, speeds of up to 60 megabytes per second are not uncommon over gigabit Ethernet.

- BackupPC SME Contrib is an add-on to SME Server that allows integration of BackupPC into the SME templated UI.

- Zmanda's community edition of BackupPC has added the ability to use FTP, as well as other patches that are part of the 3.2.0 version of mainline.

References

- [1] W. Curtis Preston (2007): Backup and Recovery. O'Reilly Media. ISBN 978-0-596-10246-3.

- [2] Shawn Powers: BackupPC. Linux Journal, March 17, 2011.

- [3] Falko Timme: Back Up Linux And Windows Systems With BackupPC, January 2007. Retrieved July 30, 2010.

- [4] Shawn Powers: BackupPC. Linux Journal, March 17, 2011.

- [5] Don Harper: BackupPC – Backup Central, May 2008. Retrieved July 30, 2010.

- [6] Mike Petersen: Deploying BackupPC on SLES, February 2008. Retrieved July 30, 2010.

- [7] Andrew Tridgell: Efficient Algorithms for Sorting and Synchronization, February 1999. Retrieved September 29, 2009.

- [8] http://backuppc.sourceforge.net/faq/BackupPC.html#how_much_disk_space_do_i_need