Rectifier (neural networks)

In the context of artificial neural networks, the rectifier is an activation function defined as

$$f(x) = \max(0, x),$$

where $x$ is the input to a neuron. This is also known as a ramp function and is analogous to half-wave rectification in electrical engineering. This activation function was first introduced to a dynamical network by Hahnloser et al. in a 2000 paper in Nature,[1] with strong biological motivations and mathematical justifications.[2] It has been used in convolutional networks[3] more effectively than the widely used logistic sigmoid (which is inspired by probability theory; see logistic regression) and its more practical[4] counterpart, the hyperbolic tangent. As of 2015, the rectifier is the most popular activation function for deep neural networks.[5]

A unit employing the rectifier is also called a rectified linear unit (ReLU).[6]
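The definition translates directly into code. Below is a minimal sketch in plain Python that works on scalar floats. The names `relu` and `relu_vec` are only illustrative and do not come from any particular library.

```python
def relu(x: float) -> float:
    """Rectified linear unit: passes positive inputs through, clamps negatives to 0."""
    return max(0.0, x)


def relu_vec(xs: list[float]) -> list[float]:
    """Apply the rectifier element-wise to a list of pre-activations."""
    return [relu(x) for x in xs]


print(relu_vec([-2.0, -0.5, 0.0, 0.5, 2.0]))  # [0.0, 0.0, 0.0, 0.5, 2.0]
```

Deep learning frameworks apply the same element-wise operation to whole tensors rather than to Python lists.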

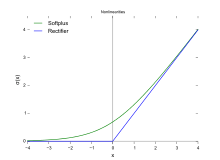

A smooth approximation to the rectifier is the analytic function

$$f(x) = \ln(1 + e^{x}),$$

which is called the softplus function.[7] The derivative of softplus is $f'(x) = \dfrac{e^{x}}{1 + e^{x}} = \dfrac{1}{1 + e^{-x}}$, i.e. the logistic function.
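The sketch below evaluates softplus and checks numerically that its derivative matches the logistic function. It is plain Python, and the function names are hypothetical. For evaluation it uses the rewriting $\ln(1 + e^x) = \max(x, 0) + \ln(1 + e^{-|x|})$, which avoids overflow for large $x$. That stable form is an implementation choice assumed here and is not part of the definition.

```python
import math


def softplus(x: float) -> float:
    """Softplus ln(1 + e^x), computed stably as max(x, 0) + ln(1 + e^{-|x|})."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def logistic(x: float) -> float:
    """Logistic function 1 / (1 + e^{-x}), the derivative of softplus."""
    return 1.0 / (1.0 + math.exp(-x))


# Compare a central finite difference of softplus against the logistic function.
h = 1e-6
for x in (-5.0, -1.0, 0.0, 1.0, 5.0):
    numeric = (softplus(x + h) - softplus(x - h)) / (2 * h)
    print(f"x={x:+.1f}  softplus={softplus(x):.4f}  d/dx~{numeric:.6f}  logistic={logistic(x):.6f}")
```

For large positive $x$, softplus is almost indistinguishable from the rectifier. For example, `softplus(20.0)` exceeds 20 by only about $2 \times 10^{-9}$.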

Rectified linear units find applications in computer vision[3] and speech recognition[8][9] using deep neural nets.

Variants

Noisy ReLUs

Rectified linear units can be extended to include Gaussian noise, making them noisy ReLUs, giving[6]

$$f(x) = \max(0, x + Y), \quad \text{with } Y \sim \mathcal{N}(0, \sigma(x)).$$

Noisy ReLUs have been used with some success in restricted Boltzmann machines for computer vision tasks.[6]
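Below is a minimal sampling sketch of a noisy ReLU in plain Python. It rests on an assumption the text above does not settle: $\sigma$ is taken to be the logistic sigmoid, and $\sigma(x)$ is read as the variance of the noise rather than its standard deviation. The function names are hypothetical.

```python
import math
import random


def logistic(x: float) -> float:
    """Numerically stable logistic sigmoid."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def noisy_relu(x: float, rng: random.Random) -> float:
    """Sample max(0, x + Y) with Y ~ N(0, sigma(x)).

    Assumption: sigma is the logistic sigmoid and sigma(x) is the *variance*
    of Y, so the standard deviation passed to gauss() is sqrt(sigma(x)).
    """
    std = math.sqrt(logistic(x))
    noise = rng.gauss(0.0, std)  # random.gauss(mu, sigma) takes a standard deviation
    return max(0.0, x + noise)


rng = random.Random(0)  # fixed seed so runs are reproducible
samples = [noisy_relu(3.0, rng) for _ in range(10_000)]
print(sum(samples) / len(samples))  # close to 3.0: the noise is zero-mean and rarely clipped
```

Under this reading, the noise is largest for strongly active units and almost vanishes for strongly negative inputs, because there the sigmoid approaches zero.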

Leaky ReLUs

Leaky ReLUs allow a small, non-zero gradient when the unit is not active.[9]

Parametric ReLUs take this idea further by making the coefficient of leakage into a parameter $a$ that is learned along with the other neural network parameters:[10]

$$f(x) = \begin{cases} x & \text{if } x > 0, \\ a x & \text{otherwise.} \end{cases}$$

Note that for $a \le 1$, this is equivalent to

$$f(x) = \max(x, a x)$$

and thus has a relation to "maxout" networks.[10]
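Here is a minimal plain-Python sketch of both variants. The leaky slope of 0.01 is an assumed, illustrative default and is not fixed by the definition. The sketch checks the $\max(x, ax)$ identity for a few values of $a \le 1$. It then takes one hypothetical gradient-descent step on $a$ to show that the slope gets a gradient like any other parameter.

```python
def leaky_relu(x: float, slope: float = 0.01) -> float:
    """Leaky ReLU: identity for x > 0, small fixed slope otherwise (0.01 is illustrative)."""
    return x if x > 0 else slope * x


def prelu(x: float, a: float) -> float:
    """Parametric ReLU: like leaky ReLU, but the slope `a` is a learned parameter."""
    return x if x > 0 else a * x


def prelu_grad_a(x: float) -> float:
    """Partial derivative of prelu(x, a) with respect to a."""
    return 0.0 if x > 0 else x


# For a <= 1, prelu(x, a) coincides with max(x, a * x).
for a in (0.0, 0.25, 1.0):
    assert all(prelu(x, a) == max(x, a * x) for x in (-2.0, -0.5, 0.0, 0.5, 2.0))

# Hypothetical toy step: minimise (prelu(x, a) - target)^2 for a single negative input.
x, target, a, lr = -2.0, -1.0, 0.25, 0.1
grad = 2 * (prelu(x, a) - target) * prelu_grad_a(x)
a -= lr * grad
print(a)  # a moves from 0.25 toward 0.5, the slope that fits the target exactly
```

In a real network the gradient with respect to $a$ is accumulated over every unit and example that shares the parameter.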

Advantages

- Biological plausibility: One-sided, compared to the antisymmetry of tanh.

- Sparse activation: For example, in a randomly initialized network, only about 50% of hidden units are activated (having a non-zero output).

- Better gradient propagation: fewer vanishing gradient problems than sigmoidal activation functions, which saturate in both directions.

- Efficient computation: Only comparison, addition and multiplication.

- Scale-invariant: $\max(0, a x) = a \max(0, x)$ for $a \ge 0$.
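The sketch below illustrates the sparsity and scale-invariance points in plain Python. The layer sizes and the initialisation scheme are hypothetical. It draws one random input and a randomly initialised layer with zero-mean Gaussian weights and zero biases. Under these assumptions, each pre-activation is symmetric about zero, so roughly half of the units produce a non-zero output. The sketch also checks $\max(0, ax) = a\max(0, x)$ for a few non-negative values of $a$.

```python
import random


def relu(x: float) -> float:
    return max(0.0, x)


rng = random.Random(42)
n_in, n_hidden = 100, 1000  # hypothetical layer sizes

# Hypothetical randomly initialised layer: zero-mean Gaussian weights, zero biases.
weights = [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_hidden)]
inputs = [rng.gauss(0.0, 1.0) for _ in range(n_in)]

activations = [relu(sum(w * x for w, x in zip(row, inputs))) for row in weights]
active_fraction = sum(act > 0.0 for act in activations) / n_hidden
print(f"fraction of active units: {active_fraction:.3f}")  # roughly 0.5

# Scale invariance: max(0, a*x) == a * max(0, x) for any a >= 0.
for a in (0.0, 0.5, 3.0):
    for x in (-2.0, -0.1, 0.0, 0.7, 4.0):
        assert relu(a * x) == a * relu(x)
```

A non-zero bias initialisation or a non-symmetric weight distribution would shift the active fraction away from one half.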

In 2011, the use of the rectifier as a non-linearity was shown for the first time to enable training of deep supervised neural networks without requiring unsupervised pre-training.[3] Compared to the sigmoid function or similar activation functions, rectified linear units allow faster and more effective training of deep neural architectures on large and complex datasets.

Potential problems

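- Non-differentiable at zero; however, it is differentiable everywhere else, including at points arbitrarily close to (but not equal to) zero.

- Not zero-centered: its outputs are always non-negative.

- Unbounded: activations could potentially blow up.

- Dying ReLU problem: with high learning rates, a unit can be pushed into a regime where its input is negative for every training example. It then outputs zero and receives zero gradient, so it stops learning.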
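The sketch below illustrates two of these points with a single hypothetical unit $y = \max(0, wx + b)$ trained by gradient descent on a squared error. The first point is the derivative at zero. Setting the derivative at $x = 0$ to 0 is an assumed convention; any value in $[0, 1]$ is a valid subgradient. The second point is the dying ReLU problem. Here the dead state is produced by hand-setting a large negative bias, which stands in for the kind of state a too-large update can produce. It is not the result of an actual high-learning-rate run.

```python
def relu(x: float) -> float:
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """ReLU derivative; the value 0.0 at x == 0 is an assumed convention (any value in [0, 1] is a subgradient)."""
    return 1.0 if x > 0 else 0.0


def train_unit(w: float, b: float, data: list[tuple[float, float]],
               lr: float = 0.1, steps: int = 200) -> tuple[float, float]:
    """Fit y ~ relu(w*x + b) by full-batch gradient descent on the mean squared error."""
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for x, y in data:
            z = w * x + b
            err = relu(z) - y
            # Chain rule: the factor relu_grad(z) is 0 whenever z <= 0.
            grad_w += 2 * err * relu_grad(z) * x / len(data)
            grad_b += 2 * err * relu_grad(z) / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


data = [(i / 10, 2 * i / 10) for i in range(11)]  # targets y = 2x on [0, 1]

healthy = train_unit(w=0.5, b=0.1, data=data)
dead = train_unit(w=0.5, b=-5.0, data=data)  # z = 0.5x - 5 < 0 for every x in [0, 1]

print("healthy:", healthy)  # w moves toward 2
print("dead:   ", dead)     # unchanged: (0.5, -5.0)
```

Because every pre-activation of the dead unit is negative, every gradient term is multiplied by zero, and no number of further steps revives it. Leaky and parametric ReLUs avoid this by keeping a non-zero slope for negative inputs.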

See also

- Softmax function

- Sigmoid function

- Tobit model

- Batch Normalization

References

1. R. Hahnloser, R. Sarpeshkar, M. A. Mahowald, R. J. Douglas, H. S. Seung (2000). "Digital selection and analogue amplification coexist in a cortex-inspired silicon circuit". Nature. 405: 947–951.

2. R. Hahnloser, H. S. Seung (2001). "Permitted and Forbidden Sets in Symmetric Threshold-Linear Networks". NIPS 2001.

3. Xavier Glorot, Antoine Bordes, Yoshua Bengio (2011). "Deep sparse rectifier neural networks". AISTATS.

4. Yann LeCun, Leon Bottou, Genevieve B. Orr, Klaus-Robert Müller (1998). "Efficient BackProp". In G. Orr and K. Müller (eds.), Neural Networks: Tricks of the Trade. Springer.

5. Yann LeCun, Yoshua Bengio, Geoffrey Hinton (2015). "Deep learning". Nature. 521: 436–444. doi:10.1038/nature14539.

6. Vinod Nair, Geoffrey Hinton (2010). "Rectified linear units improve restricted Boltzmann machines". ICML.

7. C. Dugas, Y. Bengio, F. Bélisle, C. Nadeau, R. Garcia (2001). "Incorporating Second-Order Functional Knowledge for Better Option Pricing". NIPS 2000.

8. László Tóth (2013). "Phone Recognition with Deep Sparse Rectifier Neural Networks". ICASSP.

9. Andrew L. Maas, Awni Y. Hannun, Andrew Y. Ng (2014). "Rectifier Nonlinearities Improve Neural Network Acoustic Models".

10. Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun (2015). "Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification".